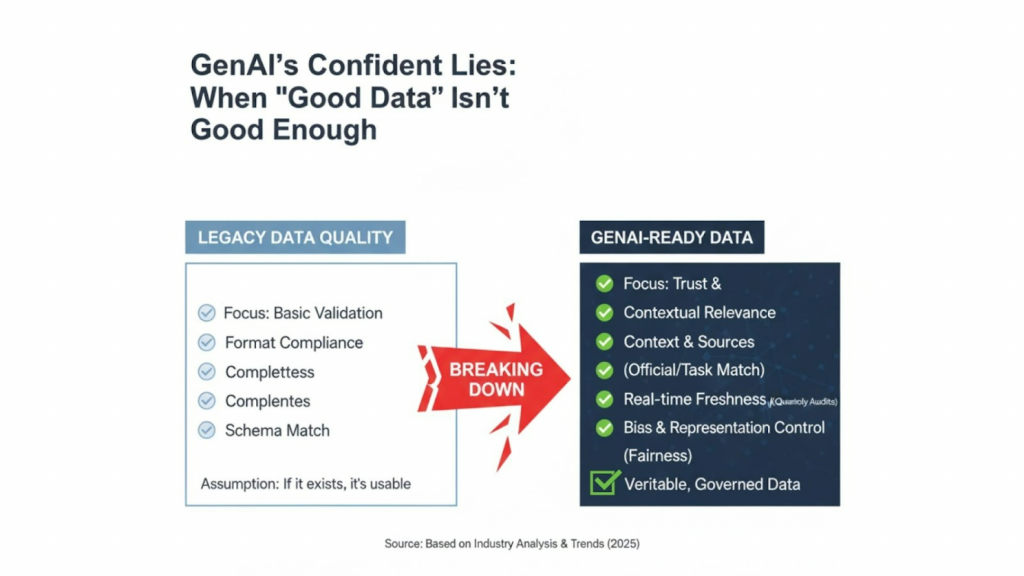

The data quality playbook used for traditional analytics is breaking down. That old checklist of accuracy, completeness, and consistency is still necessary, but in the age of generative AI, it is no longer sufficient.

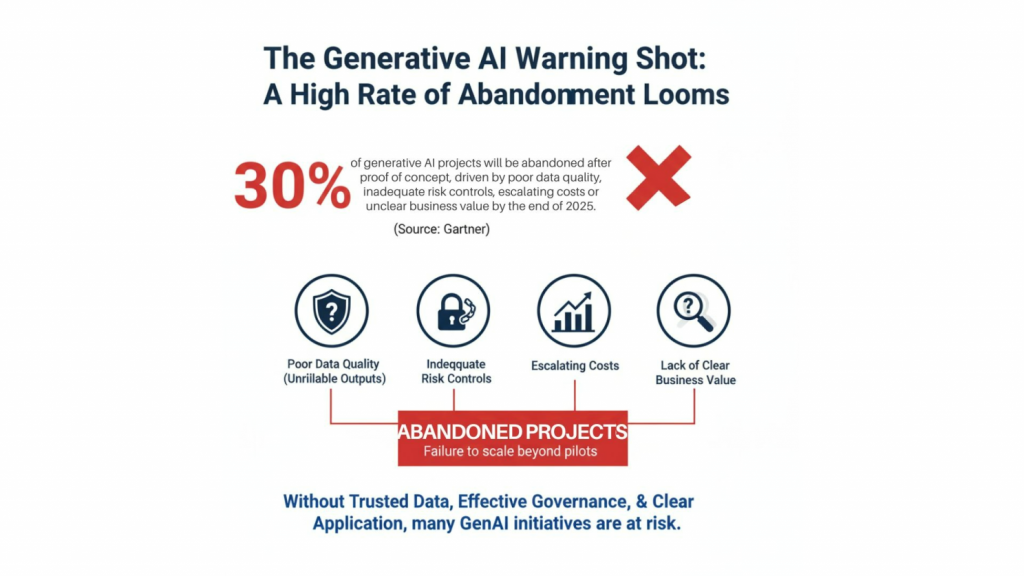

Here’s the uncomfortable truth: according to Gartner, at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025, driven by poor data quality, inadequate risk controls, escalating costs, or unclear business value (Jul 2024, Sallam & Gartner). In other words, the problem isn’t just the model architecture; it is the data and how organizations govern it.

When Your AI Becomes a Confident Liar

GenAI doesn’t just fail quietly; it hallucinates confidently, producing fluent, authoritative, but factually wrong answers. Tredence (May 2025) highlights that poor-quality, biased, or incomplete training data is one of the primary drivers of hallucinations, and that better-curated, better-governed data significantly reduces both the frequency and the impact of these errors.

The problem is simple: traditional data quality checks focus on format, completeness, and basic validation. GenAI needs something more: data that is contextually relevant, semantically consistent, and grounded in trustworthy sources. A perfectly formatted but irrelevant or biased record doesn’t make your model smarter; it makes it confidently wrong at scale.

Why Your Legacy Data Framework Is Obsolete

Legacy data quality frameworks were never designed for generative AI. They assume: if the schema matches and the field isn’t null, the data is “good enough.” GenAI breaks that assumption.

Modern GenAI programs demand:

- Context and relevance – The data must match the domain, task, and use case, not just the schema.

- Verifiable sources – Official datasets, curated knowledge bases, and governed internal data beat unvetted user-generated content.

- Real-time freshness – Quarterly data audits do not cut it when models answer against fast-moving realities.

- Bias and representation controls – GenAI can amplify existing bias and representation gaps far faster than traditional models.

This is the real shift: from generic to context-aware, governance-led data quality for AI.
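To make the contrast concrete, here is a minimal Python sketch of a record that sails through a legacy structural check yet fails on relevance, source, and freshness. Everything in it is an illustrative assumption rather than a standard: the `Record` fields, the `TRUSTED_SOURCES` set, and the 30-day `MAX_AGE` are hypothetical placeholders for rules your own data owners would define.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Record:
    text: str
    domain: str
    source: str
    updated: date


# Hypothetical policy values, chosen only for illustration.
TRUSTED_SOURCES = {"internal_kb", "official_dataset"}
MAX_AGE = timedelta(days=30)


def legacy_check(rec: Record) -> bool:
    """Structural validation only: every field is present and non-empty."""
    return bool(rec.text) and bool(rec.domain) and bool(rec.source) and rec.updated is not None


def genai_ready_issues(rec: Record, use_case_domain: str, today: date) -> list[str]:
    """Return the context-aware checks this record fails (empty list = ready)."""
    issues = []
    if not legacy_check(rec):
        issues.append("structure")
    if rec.domain != use_case_domain:
        issues.append("relevance")   # wrong domain for this use case
    if rec.source not in TRUSTED_SOURCES:
        issues.append("source")      # not a vetted, governed source
    if today - rec.updated > MAX_AGE:
        issues.append("freshness")   # older than the allowed age
    return issues


today = date(2025, 6, 1)
rec = Record("Refunds take 5 days.", "billing", "forum_post", date(2024, 1, 15))

print(legacy_check(rec))                                   # True
print(genai_ready_issues(rec, "customer_support", today))  # ['relevance', 'source', 'freshness']
```

The record is "good" by the old definition and unusable by the new one, which is exactly the gap the list above describes.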

The Three Dimensions of GenAI Data Quality

For GenAI, “good data” lives at the intersection of three dimensions (March 2025, Relyance):

- Fidelity – Does your data reliably represent reality and your business context?

- Utility – Can models trained or grounded on this data actually solve the problems that matter to your organization?

- Privacy & Compliance – Are you protecting sensitive information and meeting regulatory expectations while still preserving enough richness for powerful models?

How Torfac Supports Your Data Quality Needs

At Torfac, this new standard is built into the way data is prepared, monitored, and governed for GenAI. Torfac’s multi-layered, continuously monitored data quality framework is designed to:

- Curate relevant, high-fidelity datasets aligned to your GenAI use cases.

- Apply semantic and contextual checks, not just structural validation.

- Embed governance and lineage so every data element used by AI is traceable and auditable.

- Use real-time anomaly detection and monitoring to flag drift, outliers, and risky data before it reaches models.

By combining domain-specific data curation with active monitoring and governance, Torfac helps reduce hallucination risk and increase trust in GenAI outputs, so your systems are not just powerful, but also reliable and enterprise-ready.

How to Win with GenAI-Ready Data

Organizations that succeed with GenAI treat data quality and governance as a product, not a one‑off cleanup exercise (Jan 2025, Alation). They build repeatable practices around it, with clear owners, SLAs, and metrics to keep data AI‑ready over time.

That playbook looks like this:

- Continuous quality monitoring – Always‑on checks and anomaly detection across pipelines, instead of yearly or quarterly audits.

- Data as a product – Named data owners and stewards, versioning, and quality SLAs for critical domains that GenAI depends on.

- Unified observability – 360° visibility into application, infrastructure, and data health so issues are caught before they impact models.

- Governed RAG (Retrieval‑Augmented Generation) – Grounding models in trusted, cataloged, and governed knowledge sources to cut hallucinations and improve traceability.

- Bias and fairness checks – Regular reviews and guardrails to detect skew, under‑representation, and harmful patterns before and after deployment.
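
As one illustration of the governed RAG item, the sketch below only lets documents from cataloged sources reach the prompt and keeps their ids so answers stay traceable. It is a simplified assumption, not a production retriever: the `GOVERNED_SOURCES` set, the `Doc` fields, and the naive word-overlap ranking are hypothetical stand-ins for a real catalog and a real search index.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    source: str
    text: str


# Hypothetical catalog of governed sources.
GOVERNED_SOURCES = {"policy_kb", "product_catalog"}


def retrieve_governed(query: str, corpus: list[Doc], k: int = 2) -> list[Doc]:
    """Rank governed documents by naive word overlap with the query."""
    terms = set(query.lower().split())
    scored = []
    for doc in corpus:
        if doc.source not in GOVERNED_SOURCES:
            continue  # ungoverned content never reaches the prompt
        overlap = len(terms & set(doc.text.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, docs: list[Doc]) -> str:
    """Ground the question in retrieved text, keeping ids for traceability."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer using only the context below and cite document ids.\n"
        f"{context}\n\nQuestion: {query}"
    )


corpus = [
    Doc("kb-1", "policy_kb", "refunds are processed within 5 business days"),
    Doc("web-7", "forum_scrape", "refunds are instant and always approved"),
    Doc("cat-3", "product_catalog", "the pro plan includes priority support"),
]

query = "how long do refunds take"
docs = retrieve_governed(query, corpus)
print([d.doc_id for d in docs])  # ['kb-1']
print(build_prompt(query, docs))
```

The scraped forum post matches the query just as well as the policy document, but it is filtered out before ranking because its source is not in the catalog.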

The Business Reality

Analysts and practitioners converge on a common pattern: GenAI impact is limited not by models, but by data and governance maturity. Organizations that invest in robust governance, high-quality data, and workflow redesign see stronger innovation, better productivity, and more reliable AI, while many others remain stuck in pilot mode.

Gartner’s forecast that at least 30% of GenAI projects will be abandoned after proof of concept by the end of 2025 is a warning shot: without trusted data and clear governance, pilots will not scale (Jul 2024, Gartner).

Your Next Move

If GenAI is more than a buzzword in your roadmap, now is the time to act:

- Audit your current data quality and governance framework – Look beyond completeness and format; check relevance, lineage, and risk.

- Define what “good data” means per use case – Customer support bots, pricing engines, and risk models do not share identical quality thresholds.

- Shift to real-time monitoring – Move from reactive fixes to proactive alerts on drift, anomalies, and quality degradation.

- Invest in data and AI governance – Treat governance as an enabler of safe, scalable GenAI, not just a compliance box.

- Embed bias detection and mitigation tooling – Build accountability and fairness into every stage of the data and model lifecycle.
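
Two of these steps, per-use-case thresholds and proactive alerts, can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the `THRESHOLDS` values, the use-case names, and the simple mean-shift drift score are hypothetical, and a real pipeline would likely use richer statistics and an actual alerting channel.

```python
from statistics import mean, stdev

# Hypothetical per-use-case thresholds; real values would come from data owners.
THRESHOLDS = {
    "support_bot": {"max_null_rate": 0.05, "max_drift_z": 3.0},
    "risk_model": {"max_null_rate": 0.01, "max_drift_z": 2.0},
}


def null_rate(values):
    """Share of missing values in a batch."""
    return sum(v is None for v in values) / len(values)


def drift_z(baseline, current):
    """How many baseline standard deviations the current mean has moved."""
    return abs(mean(current) - mean(baseline)) / stdev(baseline)


def check_batch(use_case, baseline, batch):
    """Return the alerts a batch raises for one use case (empty list = healthy)."""
    if not batch:
        return ["empty_batch"]
    limits = THRESHOLDS[use_case]
    present = [v for v in batch if v is not None]
    alerts = []
    if null_rate(batch) > limits["max_null_rate"]:
        alerts.append("null_rate")
    if present and drift_z(baseline, present) > limits["max_drift_z"]:
        alerts.append("drift")
    return alerts


baseline = [10.0, 11.0, 9.0, 10.0, 10.0]
batch = [10.0] * 24 + [None]  # 4% missing values, no shift in the mean

print(check_batch("support_bot", baseline, batch))        # []
print(check_batch("risk_model", baseline, batch))         # ['null_rate']
print(check_batch("support_bot", baseline, [13.0] * 25))  # ['drift']
```

The same batch is acceptable for the support bot and rejected for the risk model, which is the practical meaning of defining "good data" per use case.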

The Bottom Line

The next wave of GenAI leaders won’t win by squeezing a few more parameters into a model. They will win by building on GenAI‑ready data: context-aware, verifiable, continuously monitored, and governed for trust.

Good data is no longer enough. GenAI-ready data is the new competitive advantage.

Contact a Torfac Data Quality expert now to see how our multi-layered, continuously monitored data quality measures can help you source the right data for your next project!

References:

Gartner (Jul 2024). Gartner: 30% of Gen AI Projects to be Abandoned by 2025.

Tredence (May 2025). Tackling AI Hallucinations: Why Data Quality in Generative AI Is Key. https://www.tredence.com/blog/hallucination-gen-ai

DataGalaxy (Sep 2025). AI governance & stewardship – Gartner Hype Cycle – DataGalaxy.

Singla, A., Sukharevsky, A., Yee, L., Chui, M., Hall, B., & Balakrishnan, T. (Jun–Jul 2025). The State of AI: Global Survey 2025. McKinsey & Company. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

#MarketResearch #B2B #CustomerExperience #CX #AI #DataQuality #ActionableInsights #ConsumerIntelligence #RevenueGrowth #BusinessStrategy #ThoughtLeadership